Posts

Elon Musk believes the best way to solve the difficulties of building AI data centers on earth is to move them into outer space. His merger this week of his rocket company SpaceX with his artificial intelligence company xAI could help get them there.

By: Engin Kayraklioglu, Brad Chamberlain

Part of a series: 7 Questions for Chapel Users

Welcome to the first interview in our 7 Questions for Chapel Users interview series for 2026! In this edition, we hear from Dr. Oliver Alvarado Rodriguez about his experiences using Chapel in his Ph.D. thesis to write Arachne, a graph analytics package for Arkouda. This article is a logical successor to our earlier interview with David Bader, who served as Oliver’s Ph.D. advisor. After Oliver graduated, we were very happy to have the opportunity to continue working with him within HPE’s Advanced Programming Team that Chapel is a part of.

by Dan Milmo

Will the race to artificial general intelligence (AGI) lead us to a land of financial plenty – or will it end in a 2008-style bust? Trillions of dollars rest on the answer.

By Clare Duffy

New York – There’s a giant question hanging over the tech industry: How long will its massive investments in AI infrastructure really last?

ST. LOUIS–(BUSINESS WIRE)–Supercomputing 2025 — Hammerspace, the high-performance data platform for AI Anywhere, today announced a breakthrough IO500 10-Node Production result that establishes a new era for high-performance data infrastructure. For the first time, a fully standards-based architecture — standard Linux, the upstream NFSv4.2 client, and commodity NVMe flash — has delivered a 10-node Production fully reproducible IO500 result traditionally achievable only by proprietary parallel filesystems.

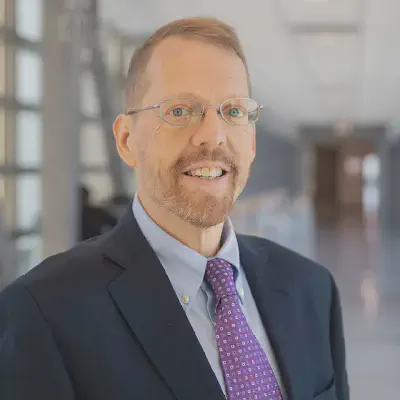

By David A. Bader

As someone who has spent decades optimizing parallel algorithms and wrestling with the complexities of high-performance computing, I’m constantly amazed by how the landscape of performance engineering continues to evolve. Today, I want to share an exciting development in our Fastcode initiative: leveraging Large Language Models (LLMs) as sophisticated analysis tools for software performance optimization.

By Dan Milmo and Dara Kerr

A significant step forward but not a leap over the finish line. That was how Sam Altman, chief executive of OpenAI, described the latest upgrade to ChatGPT this week.

Written by: Michael Giorgio

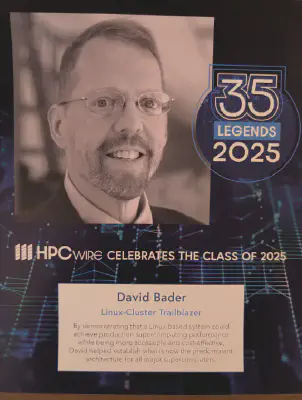

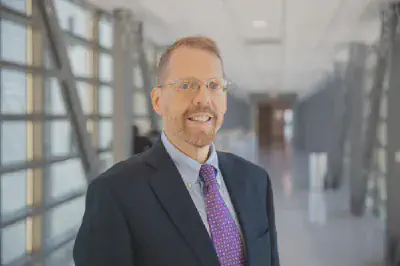

Distinguished Professor David Bader in the Ying Wu College of Computing’s Department of Data Science has been recognized among an elite roster of High-Performance Computing (HPC) pioneers in the HPCwire 35 Legends Class of 2025 list. The honorees are selected annually based on contributions to the HPC community over the past 35 years that have been instrumental in improving the quality of life on our planet through technology.

Written by: Michael Giorgio

Seven faculty members in the Ying Wu College of Computing (YWCC) have received grant support to fund eight research projects as part of the Grace Hopper Artificial Intelligence Institute at NJIT (GHRI). The Institute was launched through a $6 million investment by an anonymous donor and matching funds in collaboration with the university’s $10 million AI@NJIT initiative.

By Elizabeth MacBride, Senior Contributor. Business Journalist

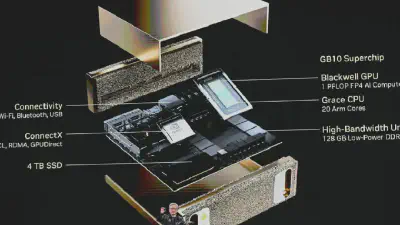

Nvidia is opening another distribution strategy for its GPU capacity, by establishing a network of IT consulting firms as partners to test and sell AI solutions. The partners use new Spark machines and sell Nvidia infrastructure to enterprises.

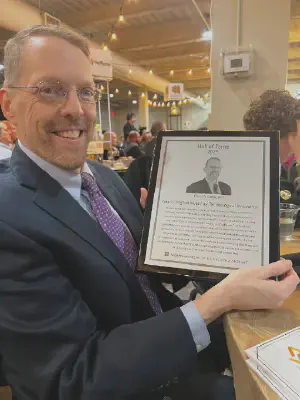

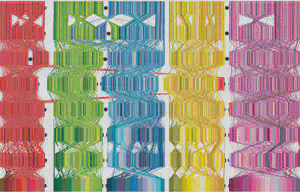

Written by: Evan Koblentz

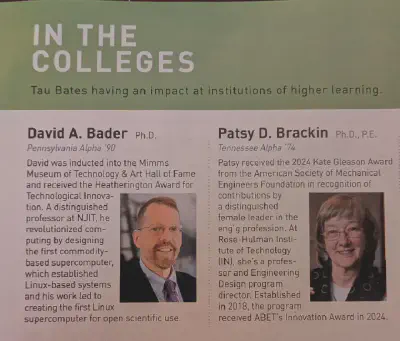

New Jersey Institute of Technology distinguished professor David Bader was inducted recently into the Mimms Museum of Technology and Art Hall of Fame, located near Atlanta.

ROSWELL, Ga. – (February 4, 2025) – Computer Museum of America (CMoA), a metro Atlanta attraction featuring one of the world’s largest collections of digital-age artifacts, today announced the induction of three new distinct members to its Hall of Fame. The trailblazing pioneers will be honored on March 6 during BYTE25, the museum’s largest fundraiser of the year.

![*[Photo: Patrick Pleul/picture alliance via Getty Images]*](/post/20250128-fastcompany/p-1-91268664-deepseek-has-called-into-question-big-ais-trillion-dollar-assumption_hu_2c6f342aa5cfaf03.webp)

By Mark Sullivan

Recently, Chinese startup DeepSeek created state-of-the-art AI models using far less computing power and capital than anyone thought possible. It then showed its work in published research papers and by allowing its models to explain the reasoning process that led to this answer or that. It also scored at or near the top in a range of benchmark tests, besting OpenAI models in several skill areas. The surprising work seems to have let some of the air out of the AI industry’s main assumption—that the best way to make models smarter is by giving them more computing power, so that the AI lab with the most Nvidia chips will have the best models and shortest route to artificial general intelligence (AGI—which refers to AI that’s better than humans at most tasks).

by Rosalie Chan

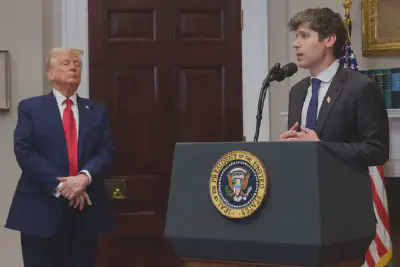

Since President Donald Trump took office Monday, he’s quickly made moves on advancing AI.

By Kif Leswing

Nvidia CEO Jensen Huang was greeted as a rock star this week at CES in Las Vegas, following an artificial intelligence boom that’s made the chipmaker the second-most-valuable company in the world.

By: Engin Kayraklioglu, Brad Chamberlain

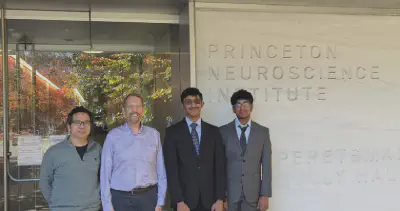

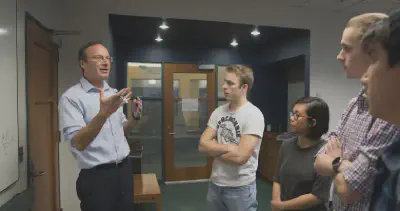

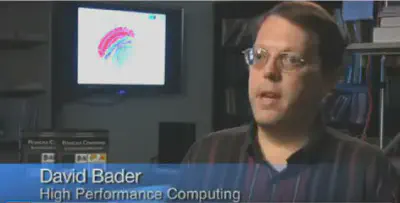

In this installment of our 7 Questions for Chapel Users series, we welcome David Bader, a Distinguished Professor in the Ying Wu College of Computing at the New Jersey Institute of Technology (NJIT). With a deep focus on high-performance computing and data science, David has consistently driven innovation in solving some of the most complex and large-scale computational problems. Read on to dive into his journey with Chapel, his current projects, and how tools like Arkouda and Arachne are accelerating data science at scale.

By Aaron Mok

GPUs, or graphics processing units, have become increasingly difficult to acquire as tech giants like OpenAI and Meta purchase mountains of them to power A.I. models. Amid an ongoing chip shortage, a crop of startups are stepping up to increase access to the highly sought-after A.I. chips—by renting them out.

By Jon Swartz

Big Tech is going nuclear in an escalating race to meet growing energy demands.

Amazon.com Inc., Alphabet Inc.’s Google and Microsoft Corp. are pouring billions of dollars into nuclear energy facilities to supply companies with emissions-free electricity to feed their artificial intelligence services.

By Clare Duffy and Dianne Gallagher, CNN

New York, CNN — The devastation in North Carolina in the wake of Hurricane Helene could have serious implications for a niche — but extremely important — corner of the tech industry.

By Daniel Munoz, NorthJersey.com

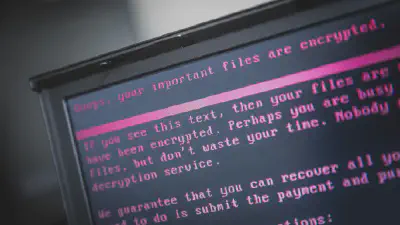

Pharmacy chain Rite Aid revealed this week that 2.2 million customers were exposed in a data breach in June.

The leaked data includes driver’s license numbers, addresses and dates of birth, Rite Aid said in a July 15 press release. The stolen data was associated with completed or attempted purchases made between June 6, 2017 and July 30, 2018, Rite Aid said.

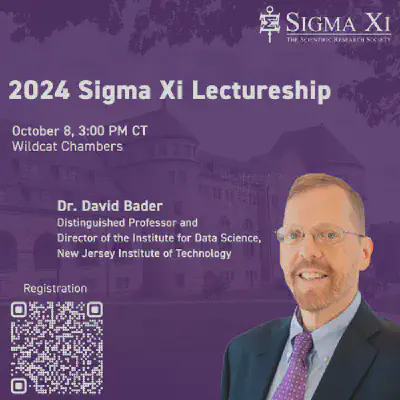

Dr. David Bader is a Distinguished Professor and a founder of the Department of Data Science in the Ying Wu College of Computing and Director of the Institute for Data Science at New Jersey Institute of Technology. He is also Chair of the NEBDHub Seed Fund Steering Committee. Earlier this year, David hosted a Masterclass with the NEBDHub. Watch to learn about open-source frameworks for massive-scale graph analytics.

By Clare Duffy, CNN

New York (CNN) – The world’s biggest chipmaker is working to resume operations following the massive earthquake that struck Taiwan Wednesday — a welcome sign for makers of products ranging from iPhones and computers to cars and washing machines that rely on advanced semiconductors.

By Ian Krietzberg

Fast Facts

- Gladstone AI last week published a nearly 300-page government-commissioned report detailing the “catastrophic” risks posed by AI.

- TheStreet sat down with David Bader, the Director of the Institute for Data Science at New Jersey Institute of Technology, to break down the report.

- “Certainly there’s a lot of hype,” Bader said, “but there’s also a lot of real-world threats.”

Last week, Gladstone AI published a report — commissioned for $250,000 by the U.S. State Department — that detailed the apparent “catastrophic” risks posed by untethered artificial intelligence technology. It was first reported on by Time.

Written by: Evan Koblentz

Artificial intelligence, data science and the emerging field of quantum computing are among the hottest research topics in computing overall. David Bader, a distinguished professor in NJIT’s Ying Wu College of Computing and director of the university’s Institute for Data Science, shared his thoughts on big-picture questions about each area. Bader is known globally for his innovative work on both the history and the cutting edge of computing.

By David Bader

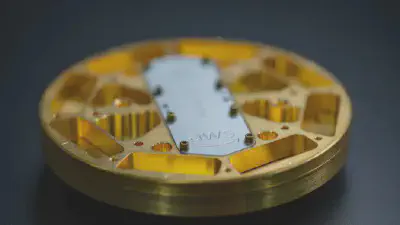

Quantum technologies harness the laws of quantum mechanics to solve complex problems beyond the capabilities of classical computers. Although quantum computing can one day lead to positive and transformative solutions for complex global issues, the development of these technologies also poses a significant and emerging threat to cybersecurity infrastructure for organizations.

Artificial intelligence (AI) lets researchers interpret human thoughts, and the technology is sparking privacy concerns. The new system can translate a person’s brain activity while listening to a story into a continuous stream of text. It’s meant to help people who can’t speak, such as those debilitated by strokes, to communicate. But there are concerns that the same techniques could one day be used to invade thoughts.

The New Jersey Big Data Alliance (NJBDA) is partnering with several organizations to provide a series of free workshops and panel discussions for data practitioners and those interested in big data. This workshop series is part of NJBDA’s effort to build and leverage collaborations to increase competitiveness, generate a highly skilled workforce, drive innovation and catalyze data-driven economic growth for New Jersey. This robust series of workshop events complements “Big Data in FinTech” – the NJBDA’s tenth Annual Symposium on May 9 at Seton Hall University.

Written by: Michael Giorgio

Today’s world is driven by data – and data science is what powers the engine in this rapidly expanding global ecosystem. To address the need for talent and knowledge in this emerging field, NJIT’s Departments of Data Science and Mathematical Sciences have launched a new Ph.D. in Data Science program, dedicated to growing the field and generating top-notch data scientists.

By: Martin Daks

An emerging technology harnesses the laws of quantum mechanics to solve problems too complex for classical computers. Quantum computing is expected to shatter barriers and turbocharge processes, from drug discovery to financial portfolio management. But this revolutionary new approach may also give hackers the ability to crack open just about any kind of digital “safe,” giving them access to trade secrets, sensitive communications and other mission-critical data. Last year, the threat prompted President Joe Biden to sign a national security memorandum, “Promoting United States Leadership in Quantum Computing While Mitigating Risks to Vulnerable Cryptographic Systems,” directing federal agencies to migrate vulnerable cryptographic systems to quantum-resistant cryptography. We spoke with some cybersecurity experts to find out what’s ahead.

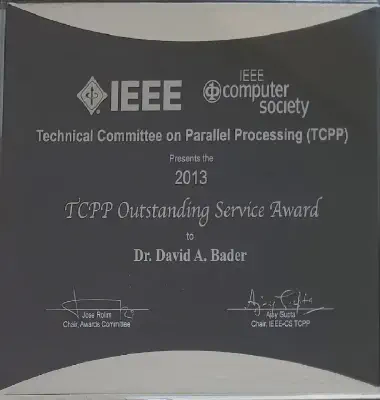

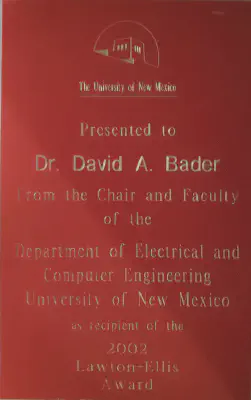

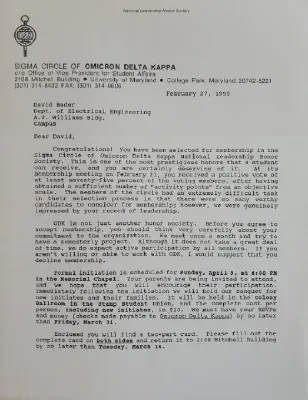

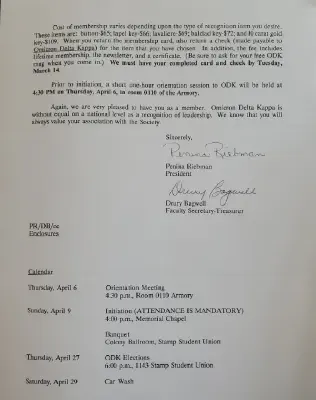

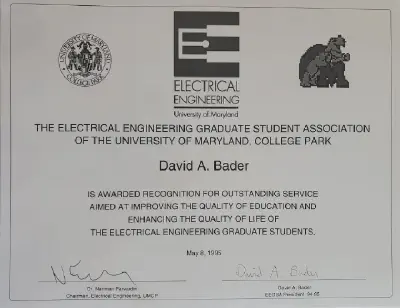

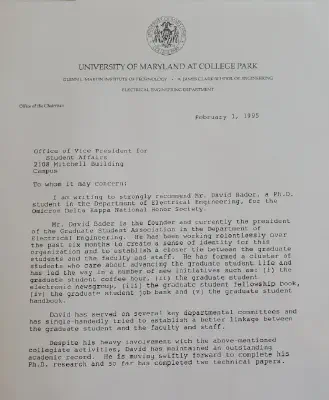

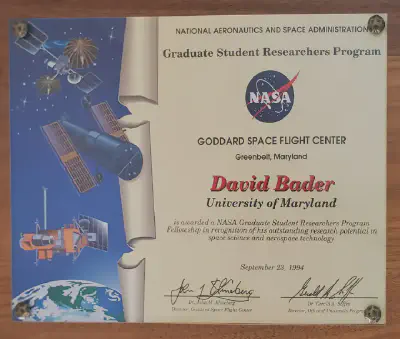

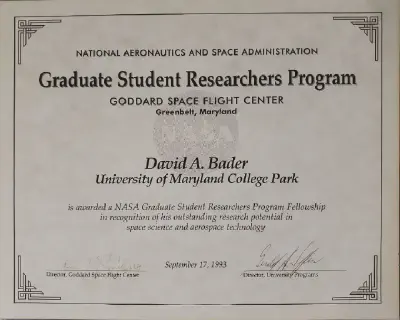

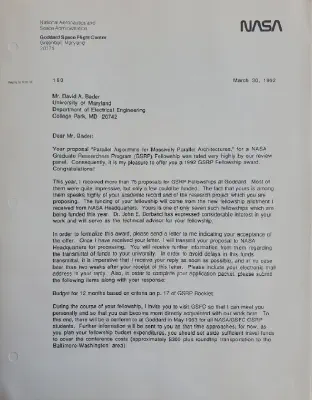

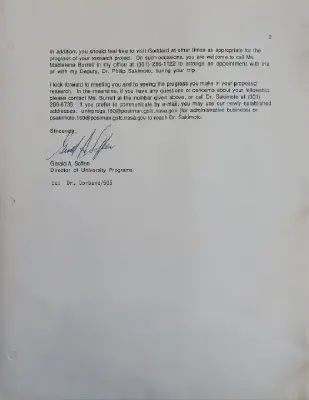

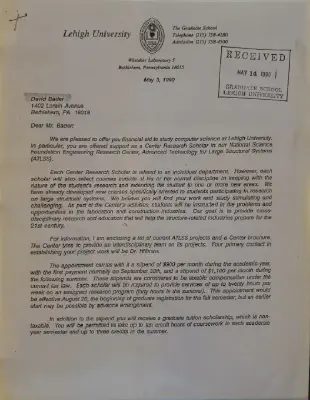

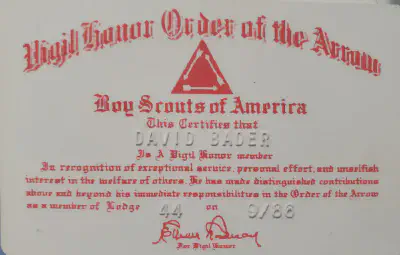

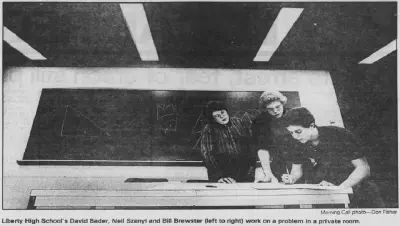

In recognition of his role in creating the Linux supercomputer, Department of Electrical and Computer Engineering (ECE) alumnus David A. Bader (Ph.D., ’96, electrical engineering) will be inducted into the A. James Clark School of Engineering’s Innovation Hall of Fame (IHOF) on Wednesday, November 9—joining a small but distinguished community of inventors who use their knowledge, perseverance, innovation, and ingenuity to change how we do things in the world.

The Fall 2022 Booz Allen Hamilton Colloquium Series kicked off its 14th year last Friday. The first talk of the semester was given by Dr. Tim O’Shea, Chief Technology Officer of DeepSig, and hosted by Professor Sennur Ulukus. O’Shea’s talk was titled “Deep Learning in the Physical Layer: Building AI-Native Sensing and Communications Systems”.

- David A. Bader: https://davidbader.net/

- NJIT Institute for Data Science: https://datascience.njit.edu/

- Arkouda: https://github.com/Bears-R-Us/arkouda

- NJIT Data Science YouTube Channel: https://www.youtube.com/c/NJITInstituteforDataScience

ML Zoomcamp: https://github.com/alexeygrigorev/mlbookcamp-code/tree/master/course-zoomcamp

Join DataTalks.Club: https://datatalks.club/slack.html

Our events: https://datatalks.club/events.html

By John Russell

That supercomputers produce impactful, lasting value is a basic tenet among the HPC community. To make the point more formally, Hyperion Research has issued a new report, The Economic and Societal Benefits of Linux Supercomputers. Inclusion of Linux is fundamental here. The powerful, open source operating system was embraced early by the HPC world and helped spawn a huge HPC application ecosystem that makes these systems so broadly useful.

New York, NY, January 19, 2022—ACM, the Association for Computing Machinery, has named 71 members ACM Fellows for wide-ranging and fundamental contributions in areas including algorithms, computer science education, cryptography, data security and privacy, medical informatics, and mobile and networked systems ─ among many other areas. The accomplishments of the 2021 ACM Fellows underpin important innovations that shape the technologies we use every day.

Podcast:

The data science field is expanding because so many businesses and other institutions require skilled workers who can manage data as well as provide insights. Companies and students are clamoring for more academic programs. There is great need, but academic institutions are still transitioning to meet the demand. Dr. David Bader, Distinguished Professor and Director of the Institute for Data Science at the New Jersey Institute of Technology, explains how his school is leading the charge to create opportunities for more students to study data science.

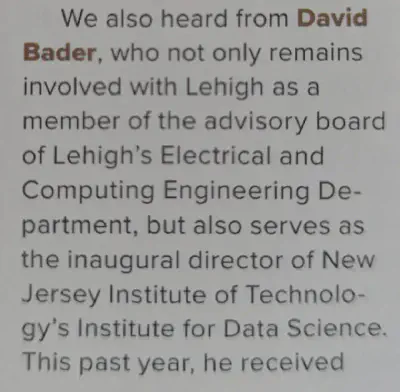

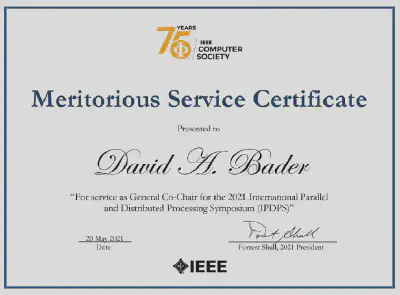

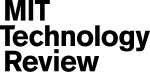

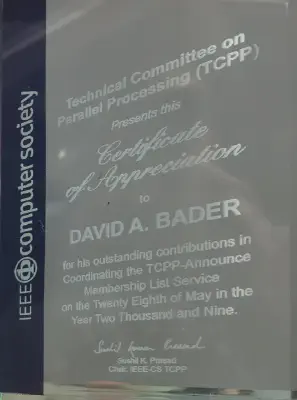

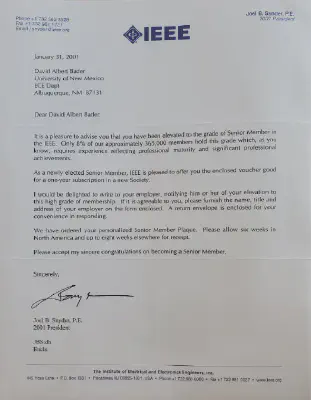

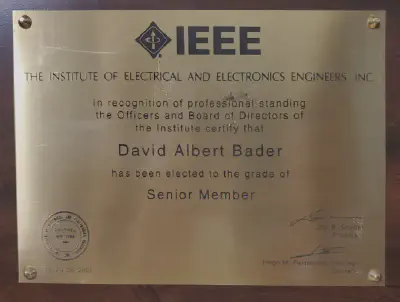

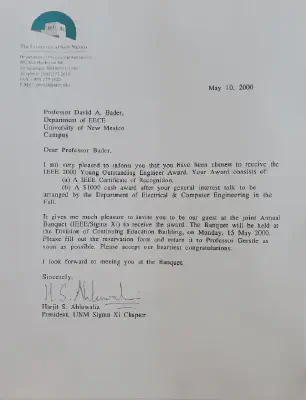

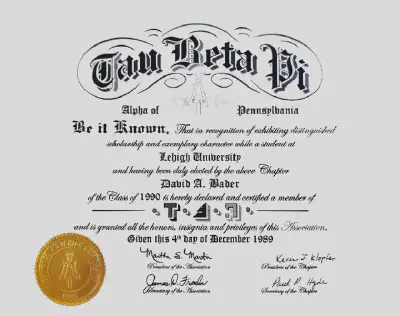

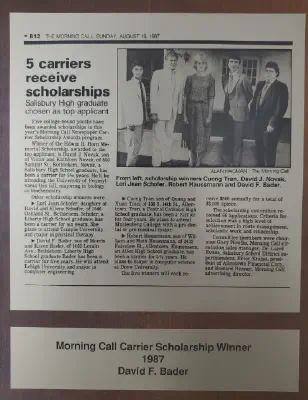

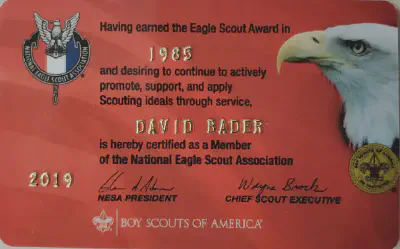

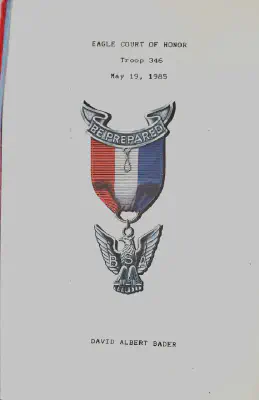

Electrical and computer engineering alum David Bader ’90 ’91G has been named as the 2021 recipient of the Sidney Fernbach Award, given by the IEEE Computer Society (IEEE CS).

Electrical and Computer Engineering Alumnus David Bader (Ph.D., ’96) is the recipient of the 2021 Sidney Fernbach Award from the IEEE Computer Society (IEEE CS). Bader is a Distinguished Professor and founder of the Department of Data Science, and inaugural Director of the Institute for Data Science, at the New Jersey Institute of Technology.

LOS ALAMITOS, Calif., 22 September 2021 – The IEEE Computer Society (IEEE CS) has named David Bader as the recipient of the 2021 Sidney Fernbach Award. Bader is a Distinguished Professor and founder of the Department of Data Science, and inaugural Director of the Institute for Data Science, at the New Jersey Institute of Technology.

By Eric Kiefer, Patch Staff

NEWARK, NJ — The following news release comes courtesy of NJIT. Learn more about posting announcements or events to your local Patch site.

Speakers at TEDxNJIT 2021 will explain how technology impacts everything from knee-replacement surgery and the monitoring of traumatic brain injuries to how we’ll live in the wake of the global pandemic.

Today we’re delving into the world of high performance data analytics with David A. Bader. Professor Bader is a Distinguished Professor in the Department of Computer Science at the New Jersey Institute of Technology (https://www.njit.edu/). Visit https://davidbader.net/ to learn more about Professor Bader.

New York City - February 2, 2021 – The New York State Board of Elections unanimously rejected certification of a voting machine called the ExpressVote XL at a special late January meeting. The machine, made by ES&S, is referred to as a “hybrid” or “all-in-one” voting machine because it combines voting and tabulation in a single device. Rather than tabulating hand-marked paper ballots, the practice recommended by security experts, the ExpressVote XL generates a computer-printed summary card for each voter. The summary cards contain barcodes representing candidates’ names, and the machine tabulates votes from the barcodes. Security experts warn that the system “could change a vote for one candidate to be a vote for another candidate,” if it were hacked. Colorado, a leader in election security, has banned barcodes in voting, due to the high risk.

Written by: Evan Koblentz

TTI/Vanguard, a prestigious organization of technology industry executives who meet a few times each year to study and debate emerging innovations, chose to virtually visit New Jersey Institute of Technology this week for their latest intellectual retreat.

One of the most studied problems in computer science is the “Minimum Spanning Tree,” or MST. In this problem, one is given a graph composed of vertices and weighted edges and asked to find a subset of edges that connects all of the vertices with the smallest possible total weight. Many real-world optimization problems are solved by finding a minimum spanning tree, such as finding the lowest-cost distribution routes on a road network, where intersections are vertices and edge weights could be the length of a road segment or the time to drive it. In 1926, Czech scientist Otakar Borůvka was the first to design an MST algorithm. Other famous approaches to solving MST are often known by the names of the scientists who designed them in the late 1950s, such as Prim, Kruskal, and Dijkstra.
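To make the problem statement concrete, here is a minimal sketch of one of the approaches named above, Kruskal's algorithm, which sorts the edges by weight and keeps each edge that joins two previously unconnected groups of vertices. The function name `kruskal_mst`, the integer vertex numbering, and the small `roads` network are illustrative assumptions, not taken from the post.

```python
from typing import List, Tuple

Edge = Tuple[float, int, int]  # (weight, u, v)


def kruskal_mst(num_vertices: int, edges: List[Edge]) -> Tuple[float, List[Edge]]:
    """Return (total weight, chosen edges) of a minimum spanning tree.

    Assumes vertices are numbered 0..num_vertices-1 and the graph is connected.
    """
    # Union-find structure: parent[x] points toward the representative of x's group.
    parent = list(range(num_vertices))

    def find(x: int) -> int:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps the trees shallow
            x = parent[x]
        return x

    total = 0.0
    tree: List[Edge] = []
    for weight, u, v in sorted(edges):  # cheapest edges first
        root_u, root_v = find(u), find(v)
        if root_u != root_v:  # the edge joins two separate groups, so keep it
            parent[root_u] = root_v
            total += weight
            tree.append((weight, u, v))
            if len(tree) == num_vertices - 1:
                break  # a spanning tree has exactly n - 1 edges
    return total, tree


# Hypothetical road network: 4 intersections, weights are segment lengths.
roads = [(4, 0, 1), (1, 0, 2), (3, 1, 2), (2, 1, 3), (5, 2, 3)]
total_cost, chosen = kruskal_mst(4, roads)
print(total_cost, chosen)
```

In this toy network the three cheapest edges happen to connect all four intersections, so the loop stops before it examines the two most expensive roads.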

The Northeast Big Data Innovation Hub Seed Fund will be launching by this fall to support data science activities within our community. We are pleased to announce David Bader as Chair of the Seed Fund Steering Committee. Bader is a Distinguished Professor in the Department of Computer Science and inaugural Director of the Institute for Data Science at New Jersey Institute of Technology, and a leading expert in solving global grand challenges in science, engineering, computing, and data science. Our full Seed Fund Steering Committee met for the first time earlier this month, and is hard at work developing and executing on this exciting new community program.

Continuing its mission to lead in computing technologies, NJIT announced today that it will establish a new Institute for Data Science, focusing on cutting-edge interdisciplinary research and development in all areas pertinent to digital data. The institute will bring existing research centers in big data, medical informatics and cybersecurity together with new research centers in data analytics and artificial intelligence, cutting across all NJIT colleges and schools, and conduct both basic and applied research.

On August 5-6, 2019, I was invited to attend the Future Computing (FC) Community of Interest Meeting sponsored by the National Coordination Office (NCO) of NITRD. The Networking and Information Technology Research and Development (NITRD) Program is a formal Federal program that coordinates the activities of 23 member agencies to tackle multidisciplinary, multitechnology, and multisector cyberinfrastructure R&D needs of the Federal Government and the Nation. The meeting was held in Washington, DC, at the NITRD NCO office.

Professor David Bader will lead the new Institute for Data Science at the New Jersey Institute of Technology. Focused on cutting-edge interdisciplinary research and development in all areas pertinent to digital data, the institute will bring existing research centers in big data, medical informatics and cybersecurity together to conduct both basic and applied research.

In January, Facebook invited university faculty to respond to a call for research proposals on AI System Hardware/Software Co-Design. Co-design implies simultaneous design and optimization of several aspects of the system, including hardware and software, to achieve a set target for a given system metric, such as throughput, latency, power, size, or any combination thereof. Deep learning has been particularly amenable to such co-design processes across various parts of the software and hardware stack, leading to a variety of novel algorithms, numerical optimizations, and AI hardware.

Georgia Tech, UC Davis, Texas A&M Join NVAIL Program with Focus on Graph Analytics

By Sandra Skaff

NVIDIA is partnering with three leading universities — Georgia Tech, the University of California, Davis, and Texas A&M — as part of our NVIDIA AI Labs program, to build the future of graph analytics on GPUs.

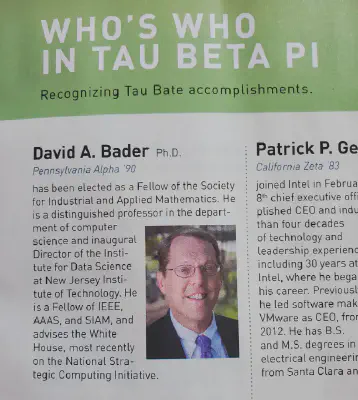

The Society for Industrial and Applied Mathematics selected 28 fellows for 2019 in recognition of their research and service to the community.

David A. Bader, a professor and chair of computational science and engineering at the Georgia Institute of Technology, for contributions in high-performance algorithms and streaming analytics and for leadership in the field of computational science.

SIAM Recognizes Distinguished Work through Fellows Program

Society for Industrial and Applied Mathematics (SIAM) is pleased to announce the 2019 Class of SIAM Fellows. These distinguished members were nominated for their exemplary research as well as outstanding service to the community. Through their contributions, SIAM Fellows help advance the fields of applied mathematics and computational science.

Georgia Tech high-performance computing (HPC) experts are gathered in Dallas this week to take part in the HPC community’s largest annual event — the International Conference for High Performance Computing, Networking, Storage, and Analysis — commonly referred to as Supercomputing. This year’s conference, SC’18, opened Sunday at the Kay Bailey Hutchison Convention Center Dallas and runs through Nov. 16.

School of Computational Science and Engineering Chair and Professor David Bader has been named Editor-in-Chief (EiC) of ACM Transactions on Parallel Computing (ACM ToPC).

ACM Transactions on Parallel Computing is a forum for novel and innovative work on all aspects of parallel computing, and addresses all classes of parallel-processing platforms, from concurrent and multithreaded to clusters and supercomputers.

In this video from PASC18, David Bader from Georgia Tech summarizes his keynote talk on Big Data Analytics.

“Emerging real-world graph problems include: detecting and preventing disease in human populations; revealing community structure in large social networks; and improving the resilience of the electric power grid. Unlike traditional applications in computational science and engineering, solving these social problems at scale often raises new challenges because of the sparsity and lack of locality in the data, the need for research on scalable algorithms, and development of frameworks for solving these real-world problems on high performance computers, and for improved models that capture the noise and bias inherent in the torrential data streams. In this talk, Bader will discuss the opportunities and challenges in massive data-intensive computing for applications in social sciences, physical sciences, and engineering.”

In this keynote video from PASC18, David Bader from Georgia Tech presents: Massive-Scale Analytics Applied to Real-World Problems.

“Emerging real-world graph problems include: detecting and preventing disease in human populations; revealing community structure in large social networks; and improving the resilience of the electric power grid. Unlike traditional applications in computational science and engineering, solving these social problems at scale often raises new challenges because of the sparsity and lack of locality in the data, the need for research on scalable algorithms and development of frameworks for solving these real-world problems on high performance computers, and for improved models that capture the noise and bias inherent in the torrential data streams. In this talk, Bader will discuss the opportunities and challenges in massive data-intensive computing for applications in social sciences, physical sciences, and engineering.”

NEW DELHI: Bennett University’s computer science and engineering (CSE) department held its first international conference on machine learning and data science at its Greater Noida campus that saw researchers and academicians deliberating on the new wave of technologies and their impact on the world of big data, machine learning and artificial intelligence (AI).

(Georgia Tech, Atlanta, GA, 10 July 2017) The Georgia Institute of Technology and the University of Southern California Viterbi School of Engineering have been selected to receive Defense Advanced Research Projects Agency (DARPA) funding under the Hierarchical Identify Verify Exploit (HIVE) program. Georgia Tech and USC are to receive total funding of $6.8 million over 4.5 years to develop a powerful new data analysis and computing platform.

Georgia Tech Professor David Bader, chair of the School of Computational Science and Engineering (CSE), participated in the National Strategic Computing Initiative (NSCI) Anniversary Workshop in Washington D.C., held July 29. Created in 2015 via an Executive Order by President Barack Obama, the NSCI is responsible for ensuring the United States continues leading in high-performance computing (HPC) in coming decades.

By Michael Byrne

Earlier this week, President Obama signed an executive order creating the National Strategic Computing Initiative, a vast effort at creating supercomputers at exaflop scales. Cool. An exaflop-scale supercomputer is capable of 10^18 floating point operations (FLOPS) per second, which is a whole lot of FLOPS.

By Katherine Noyes, Senior U.S. Correspondent, IDG News Service

Hard on the heels of the publication of the latest Top 500 ranking of the world’s fastest supercomputers, IBM and Nvidia on Monday announced they have teamed up to launch two new supercomputer centers of excellence to develop the next generation of contenders.

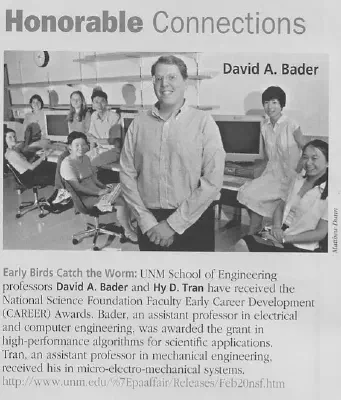

Following a national search for new leadership of its School of Computational Science and Engineering (CSE), Georgia Tech’s College of Computing has selected its own David A. Bader, a renowned leader in high-performance computing, to chair the school.

By Tiffany Trader

Researchers at Georgia Institute of Technology and University of Southern California will receive nearly $2 million in federal funding for the creation of tools that will help developers exploit hardware accelerators in a cost-effective and power-efficient manner. The purpose of this three-year NSF grant is to bring formerly niche supercomputing capabilities into the hands of a more general audience to help them achieve high-performance for applications that were previously deemed hard to optimize. The project will involve the use of tablets, smart phones and other Internet-era devices, according to David Bader, the lead principal investigator.

In this guest feature from Scientific Computing World, Georgia Institute of Technology’s David A. Bader discusses his upcoming ISC’13 session, Better Understanding Brains, Genomes & Life Using HPC Systems.

Supercomputing at ISC has traditionally focused on problems in areas such as the simulation space for physical phenomena. Manufacturing, weather simulations and molecular dynamics have all been popular topics, but an emerging trend is the examination of how we use high-end computing to solve some of the most important problems that affect the human condition.

In our last few posts we discussed the fact that over 90% of supercomputers (94.2% to be precise) employ Linux as their operating system. In this post, a sequel to those posts, we shall attempt to investigate the strengths of Linux that make it a suitable, and perhaps the best, choice for a supercomputer OS.

By Derrick Harris

It’s not easy work turning the Mayberry Police Department into the team from C.S.I., or turning an idea for a new type of social network analysis into something like Klout on steroids, but those types of transformations are becoming increasingly more possible. The world’s universities and research institutions are hard at work figuring out ways to make the mountains of social data generated every day more useful and, hopefully, to make us realize there’s more to social data than just figuring out whose digital voice is the loudest.

By Nicole Hemsoth

The world lost one of its most profound science fiction authors in the early eighties, long before the flood of data came down the vast virtual mountain.

It was a sad loss for literature, but it also left a devastating hole in the hopes of those seeking a modern fortuneteller who could so gracefully grasp the impact of the data-humanity dynamic. Dick foresaw a massively connected society—and all of the challenges, beauties, frights and potential for invasion (or safety, depending on your outlook).

By Robert Gelber

Search giant Google along with researchers from Stanford University have made an interesting discovery based on an X labs project. After being fed 10 million images from YouTube, a 16,000-core cluster learned how to recognize various objects, including cats. Earlier this week, the New York Times detailed the program, explaining its methods and potential use cases.

Supercomputing performance is getting a new measurement with the Graph500 executive committee’s announcement of specifications for a more representative way to rate the large-scale data analytics at the heart of high-performance computing. An international team that includes Sandia National Laboratories announced the single-source shortest-path specification to assess computing performance on Tuesday at the International Supercomputing Conference in Hamburg, Germany. The latest benchmark “highlights the importance of new systems that can find the proverbial needle in the haystack of data,” said Graph500 executive committee member David A. Bader, a professor in the School of Computational Science and Engineering and executive director of High-Performance Computing at the Georgia Institute of Technology. The new specification will measure the closest distance between two things, said Sandia National Laboratories researcher Richard Murphy, who heads the executive committee. For example, it would seek the smallest number of people between two people chosen randomly in the professional network LinkedIn, finding the fewest friend of a friend of a friend links between them, he said.
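The "fewest links between two people" example can be illustrated with a breadth-first search, which finds hop counts from one source to every reachable vertex of an unweighted graph. This is only a small sketch of that idea and is not the Graph500 reference code; the function name `hop_distances` and the five-person `network` are hypothetical.

```python
from collections import deque
from typing import Dict, List


def hop_distances(graph: Dict[str, List[str]], source: str) -> Dict[str, int]:
    """Return the fewest number of links from source to every reachable person."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        person = queue.popleft()
        for contact in graph.get(person, []):
            if contact not in dist:  # first visit is along a shortest path
                dist[contact] = dist[person] + 1
                queue.append(contact)
    return dist


# Hypothetical professional network, stored as adjacency lists.
network = {
    "ana": ["ben", "cho"],
    "ben": ["ana", "dev"],
    "cho": ["ana", "dev"],
    "dev": ["ben", "cho", "eli"],
    "eli": ["dev"],
}
distances = hop_distances(network, "ana")
print(distances)
```

Breadth-first search is enough here because every link counts as one hop; a graph with weighted edges would need a different single-source shortest-path method, such as Dijkstra's algorithm.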

In 2011, The University of Maryland’s Department of Electrical and Computer Engineering established the Distinguished Alumni Award to recognize alumni who have made significant and meritorious contributions to their fields. Alumni are nominated by their advising professors or the department chair, and the Department Council then approves their selection. In early May, the faculty and staff gather to honor the recipients at a luncheon.

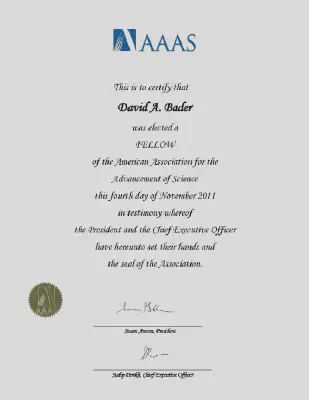

In November 2011, the AAAS Council elected 539 members as Fellows of AAAS. These individuals will be recognized for their contributions to science and technology at the Fellows Forum to be held on 18 February 2012 during the AAAS Annual Meeting in Vancouver, British Columbia. The new Fellows will receive a certificate and a blue and gold rosette as a symbol of their distinguished accomplishments.

The Pentagon is currently working to prevent another WikiLeaks situation within the department. Wired.com recently reported that a group of military-funded scientists is developing a sophisticated computer system that can scan and interpret every keystroke, log-in and uploaded file on the Pentagon’s networks.

By Julian Sanchez

I wrote on Monday that a cybersecurity bill overwhelmingly approved by the House Permanent Select Committee on Intelligence risks creating a significantly broader loophole in federal electronic surveillance law than its boosters expect or intend. Creating both legal leeway and a trusted environment for limited information sharing about cybersecurity threats—such as the identifying signatures of malware or automated attack patterns—is a good idea. Yet the wording of the proposed statute permits broad collection and disclosure of any information that would be relevant to protecting against “cyber threats,” broadly defined. For now, that mostly means monitoring the behavior of software; in the near future, it could as easily mean monitoring the behavior of people.

By Darlene Storm

Homeland Security Director Janet Napolitano said the “risk of ‘lone wolf’ attackers, with no ties to known extremist networks or grand conspiracies, is on the rise as the global terrorist threat has shifted,” reported CBSNews. An alleged example of such a lone wolf terror suspect is U.S. citizen Jose Pimentel, who learned “bomb-making on the Internet and considered changing his name to Osama out of loyalty to Osama bin Laden.” He was arrested on charges of “plotting to blow up post offices and police cars and to kill U.S. troops.” But the CSMonitor reported the FBI decided Pimentel was not a credible threat. It’s unlikely Pimentel will be able to claim “entrapment” since he “left muddy footprints on the Internet” which proves “his intent was to cause harm.” The grand jury decision against Pimentel was delayed until January, as others described “the Idiot Jihadist Next Door” as just another “homegrown U.S. terrorist wannabe.”

By Rich Brueckner, insideHPC

In this video, Professor David Bader from Georgia Tech discusses his participation in the DARPA ADAMS project. The Anomaly Detection at Multiple Scales (ADAMS) program uses Big Data Analytics to look for cleared personnel that might be on the verge of “Breaking Bad” and becoming internal security threats.

Seattle-based CODONiS, a provider of advanced computing platforms for life sciences and healthcare, has teamed up with scientists from the world-renowned Institute for Systems Biology, a nonprofit research organization in Seattle, to advance biomedical computing for future personalized healthcare. The results from this ground-breaking collaboration will be discussed at the “Personalized Healthcare Challenges for High Performance Computing” panel discussion being held at the SC11 Conference in Seattle on November 15, 2011.

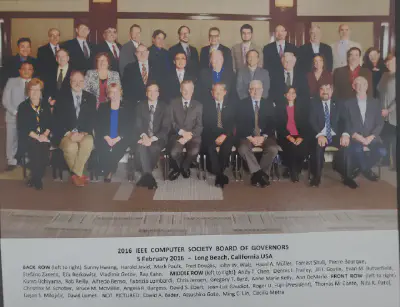

In 2010, David A. Bader received the IEEE Computer Society Golden Core Award. A plaque is awarded for long-standing member or staff service to the society. This program was initiated in 1996 with a charter membership of 450. Each year the Awards Committee will select additional recipients from a continuing pool of qualified candidates and permanently include their names in the Golden Core Member master list.

By Michael Feldman

The US Defense Advanced Research Projects Agency (DARPA) has selected four “performers” to develop prototype systems for its Ubiquitous High Performance Computing (UHPC) program. According to a press release issued on August 6, the organizations include Intel, NVIDIA, MIT, and Sandia National Laboratories. Georgia Tech was also tapped to head up an evaluation team for the systems under development. The first UHPC prototype systems are slated to be completed in 2018.

By Michael Feldman

Tuesday marks the first full day of the conference technical program. This year’s conference keynote will be given by Michael Dell, chairman and CEO of Dell, Inc. Dell’s selection reflects both the changing face of the industry, and the conference’s location – Dell is headquartered about 20 miles north of Austin in Round Rock, Texas.

Third Annual High Performance Computing Day at Lehigh

http://www.lehigh.edu/computing/hpc/hpcday.html

Friday, April 4, 2008

Featured Keynote Speaker

David Bader ’90, ’91G

Petascale Phylogenetic Reconstruction of Evolutionary Histories

http://www.lehigh.edu/computing/hpc/hpcday/2008/hpckeynote.html

Executive Director of High Performance Computing

College of Computing, Georgia Institute of Technology

The National Academy of Engineering (NAE) has selected two Georgia Tech faculty — College of Computing Associate Professor David Bader and Mechanical Engineering Assistant Professor Samuel Graham — to participate in the NAE’s annual Frontiers of Engineering symposium, a three-day event that will bring together engineers ages 30 to 45 who are performing cutting-edge engineering research and technical work in a variety of disciplines. Participants were nominated by fellow engineers or organizations and chosen from 260 applicants. The symposium will be held Sept. 24-26 at Microsoft Research in Redmond, Wash., and will examine trustworthy computer systems, safe water technologies, modeling and simulating human behavior, biotechnology for fuels and chemicals and the control of protein conformations. For more information about Frontiers of Engineering, visit www.nae.edu/frontiers.

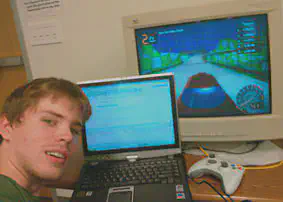

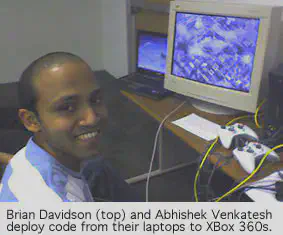

Georgia Tech is one of the first universities to deploy the IBM BladeCenter QS20 Server for production use, through the Sony-Toshiba-IBM (STI) Center of Competence for the Cell Broadband Engine (http://sti.cc.gatech.edu/) in the College of Computing at Georgia Tech. The QS20 uses the same ground-breaking Cell/B.E. processor appearing in products such as Sony Computer Entertainment’s PlayStation3 computer entertainment system, and Toshiba’s Cell Reference Set, a development tool for Cell/B.E. applications.

Georgia Tech will be hosting a two-day workshop on software and applications for the Cell Broadband Engine, to be held on Monday, June 18 and Tuesday, June 19, at the Klaus Advanced Computing Building (http://www.cc.gatech.edu/) at the Georgia Institute of Technology in Atlanta, GA, United States. The workshop is sponsored by Georgia Tech and the Sony-Toshiba-IBM (STI) Center of Competence for the Cell BE.

The College of Computing at Georgia Tech today announced its designation as the first Sony-Toshiba-IBM (STI) Center of Competence focused on the Cell Broadband Engine™ (Cell BE) microprocessor. IBM® Corp., Sony Corporation and Toshiba Corporation selected to partner with the College of Computing at Georgia Tech to build a community of programmers and broaden industry support for the Cell BE processor.

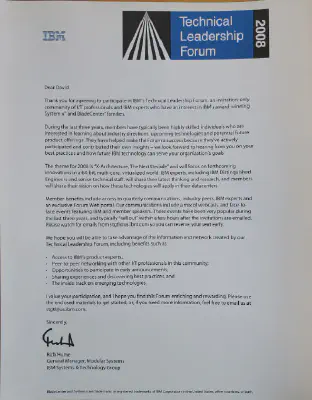

Congratulations to Associate Professor David Bader, who recently received a 2006 IBM Faculty Award in recognition of his outstanding achievement and importance to industry. The highly competitive award, valued at $40,000, was given to Bader for making fundamental contributions to the design and optimization of parallel scientific libraries for multicore processors, such as the IBM Cell. As an international leader in innovation for the most advanced computing systems, IBM recognizes the strength of collaborative research with the College of Computing at Georgia Tech’s Computational Science and Engineering (CSE) division.

Ph.D. alumnus David Bader ’96, Associate Professor of Computational Science and Engineering at Georgia Tech, has joined the Technical Advisory Board of DSPlogic, a provider of FPGA-based, reconfigurable computing and signal processing products and services. Bader has been a pioneer in the field of high performance computing for problems in bioinformatics and computational genomics, and has co-authored over 75 articles in peer-reviewed journals and conferences. His main areas of research are in parallel algorithms, combinatorial optimization, and computational biology and genomics.

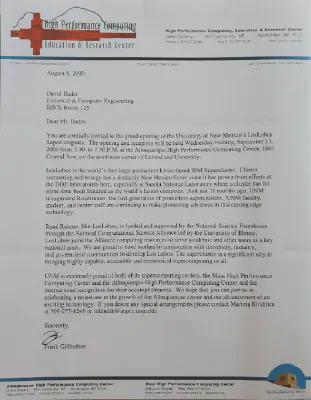

Using the largest open-production Linux supercluster in the world, LosLobos, researchers at The University of New Mexico’s Albuquerque High Performance Computing Center (http://www.ahpcc.unm.edu) have achieved a nearly one-million-fold speedup in solving the computationally hard phylogeny reconstruction problem for the family of twelve Bluebell species (scientific name: Campanulaceae) from the flowers’ chloroplast gene order data. (The problem size includes a thirteenth plant, Tobacco, used as a distantly related outgroup.) Phylogenies derived from gene order data may prove crucial in answering some fundamental open questions in biomolecular evolution. Yet very few techniques are available for such phylogenetic reconstructions.

IEEE Concurrency’s home page (http://computer.org/concurrency/) now includes a link to ParaScope, a comprehensive listing of parallel computing sites on the Internet. The list is maintained by David A. Bader, assistant professor in the University of New Mexico’s Department of Electrical and Computer Engineering. You can also go directly to the links at http://computer.org/parascope/#parallel.

By Alan Beck, managing editor

Contrary to strident popular opinion, the enervating quality of the Web is generated more through an abundance of information than through an accumulation of moral deficits. Nowhere is this more evident than in the enormous collection of sites dealing with HPC. This new column, slated to appear quarterly, is not meant to provide a thorough compendium of HPC-related material on the Web; several resources already perform that function quite admirably, e.g. David A. Bader’s page, http://www.umiacs.umd.edu/~dbader/sites.html, CalTech’s list, http://www.ccsf.caltech.edu/other_sites.html, Jonathan Hardwick’s page, http://www.cs.cmu.edu/~scandal/resources.html, and others. Nor can it hope to review sites of inordinate significance; while journalists may lay legitimate claim to insight, they cannot do the same for prescience.